"To ban or not to ban?" This Shakespearean dilemma plagues game developers. In the ever-evolving landscape of online gaming, maintaining player safety and upholding fair play is no easy task. At ACE, we’ve come to understand that traditional punitive methods—like account bans and gameplay restrictions—are no longer sufficient on their own. While such measures remove harmful behavior from the ecosystem, they often fail to address the root causes behind players’ actions, especially when it comes to ambiguous behaviors like strategic AFK or toxic communication.

This article shares insights from the ACE security team, based on a comprehensive approach developed by Alex Zhou and his team.

Why Punishment Alone Falls Short

At first glance, cheating or hacking seems easy to handle—just punish the offenders. But the reality is far more nuanced. Many problematic behaviors fall into a "gray zone," where intention and impact aren’t always clear. For example, is a player throwing a match intentionally, or just having a bad day?

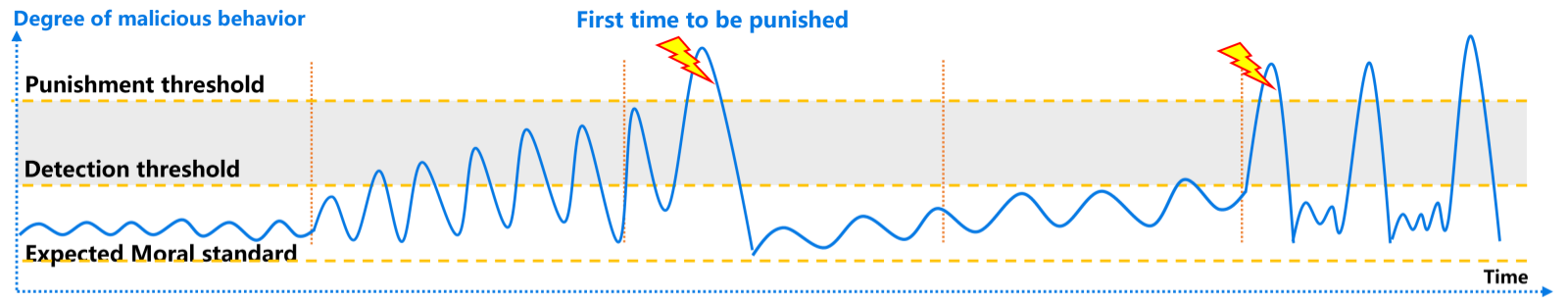

Our early research, starting in 2021, revealed that many players who were eventually punished had long histories of boundary-testing behavior. They often operated in a space between detection and punishment thresholds—where rule violations were present but not severe enough to trigger sanctions.

Unfortunately, even after punishment, many players simply switch accounts or devices and continue their negative behavior. Why? Because the perceived benefits of rule-breaking outweigh the consequences. Clearly, punishment alone isn’t enough.

A Shift Toward Proactive Interventions

Understanding Player Risk Tiers

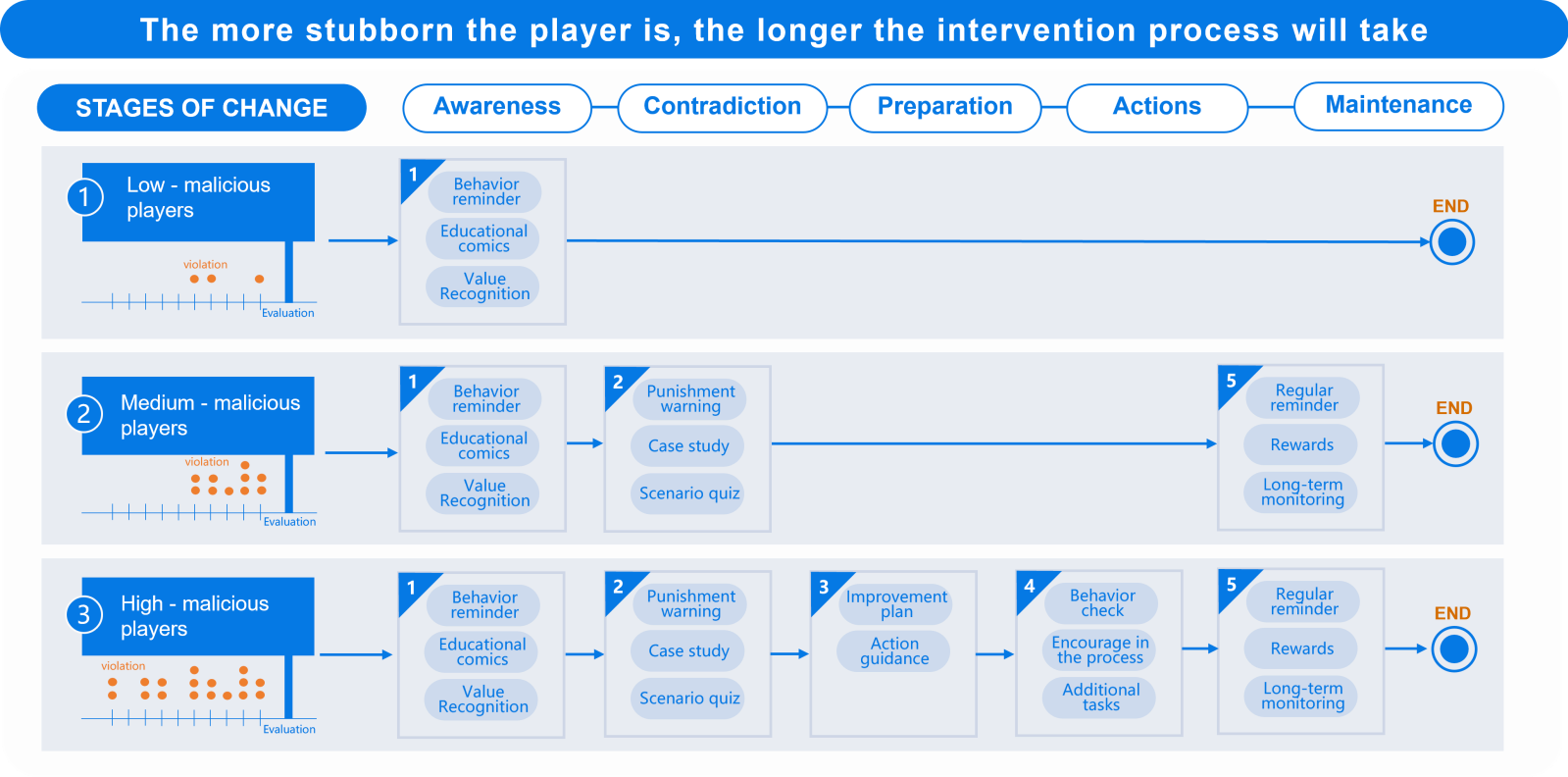

To address this, ACE developed a proactive intervention system that engages players before they reach the threshold for punishment. This serves a dual purpose: It reduces the shock of sudden account restrictions while simultaneously reshaping how players perceive and adjust their in-game conduct. The intervention process starts with identifying behavior patterns and dynamically profiling players based on risk levels:

1. Low-Risk Players: Occasional slip-ups, minimal impact. Ideal candidates for soft interventions.

2. Medium-Risk Players: Repeated boundary-pushing. High priority for targeted interventions.

3. High-Risk Players: Chronic offenders with entrenched habits. Require strict punitive action.

Using models like RFM (Recency, Frequency, Monetary) and metrics like “Non-Violation Length,” we can better understand a player’s behavioral trajectory and potential for reform.
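As a rough illustration of how such profiling could work, the sketch below derives RFM-style features from a violation log and maps them to the tiers above. It is not ACE's actual model. The `Violation` record, the `severity` weight (standing in for the "Monetary" dimension), the 90-day window, the reading of "Non-Violation Length" as the longest clean streak in that window, and every threshold are assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Violation:
    day: date
    severity: float  # hypothetical weight in [0, 1], standing in for RFM's "M"


def profile(violations, today, window_days=90):
    """Summarise a player's recent history as RFM-style features."""
    cutoff = today - timedelta(days=window_days)
    recent = sorted((v for v in violations if v.day >= cutoff), key=lambda v: v.day)
    if not recent:
        return {"recency": None, "frequency": 0, "magnitude": 0.0,
                "non_violation_length": window_days}
    days = [v.day for v in recent]
    # Gaps between consecutive violations, plus the current clean streak.
    gaps = [(b - a).days for a, b in zip(days, days[1:])]
    gaps.append((today - days[-1]).days)
    return {
        "recency": (today - days[-1]).days,        # days since last violation
        "frequency": len(recent),                  # violations in the window
        "magnitude": sum(v.severity for v in recent),
        "non_violation_length": max(gaps),         # assumed: longest clean streak
    }


def risk_tier(p):
    """Map a profile to a tier; all thresholds are illustrative only."""
    if p["frequency"] == 0:
        return "none"
    if p["frequency"] >= 6 or p["magnitude"] >= 3.0:
        return "high"
    if p["frequency"] >= 3 or p["non_violation_length"] < 14:
        return "medium"
    return "low"
```

In practice the thresholds would be tuned per game and per violation type, and the tier would be recomputed as new behavior data arrives so that the profile stays dynamic.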

Clarifying Rule Violations

Many players misbehave not out of malice but out of confusion. For instance, in MOBA games, actions like jungle stealing or going AFK often fall into rulebook gray zones. We categorize violations into four types:

1. Non-Negotiables – Severe violations like black-market trading or real-world crimes

2. Niche Violations – Exploitative teaming ("booster squads"), off-platform transactions

3. Ambiguous Boundaries – Toxic chat vs. casual trash talk, sexual content vs. artistic expression

4. Unclear Consequences – Mismatched punishment intensity, inconsistent penalty escalation

We identified 57 unique scenarios where intervention could replace punishment—especially in games with rigid sanction systems where flexibility is limited.

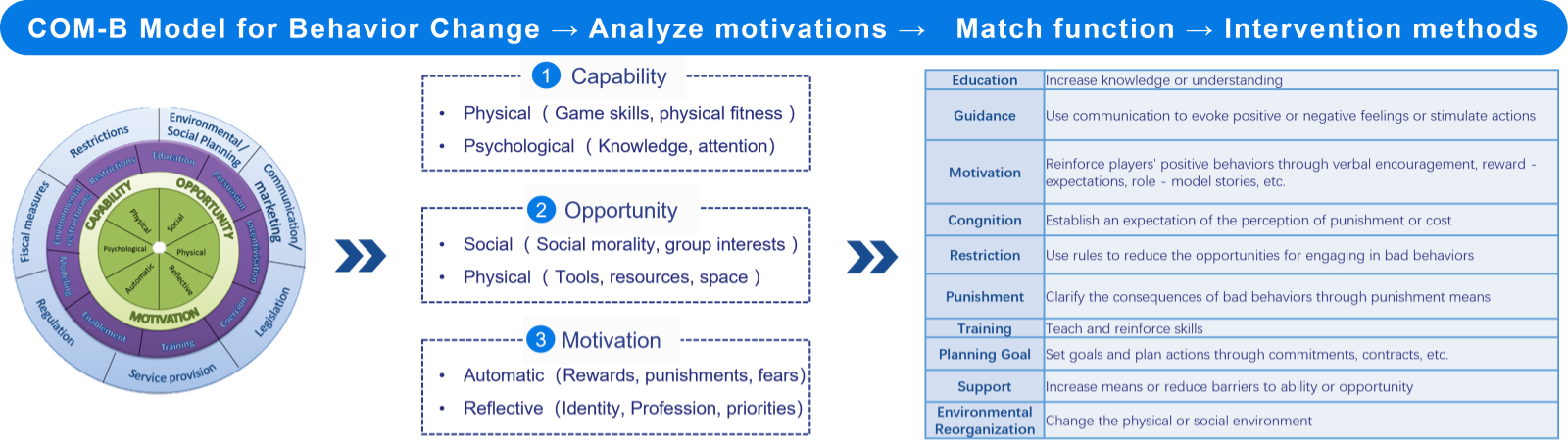

The COM-B Model

To design effective interventions, we rely on the COM-B model, in which Capability, Opportunity, and Motivation together drive Behavior. This behavioral framework helps us understand why players act out and how to guide them toward improvement.

Taking League of Legends players as an example, COM-B reveals three intervention points:

1. Lack of team spirit understanding

2. Unclear punishment expectations

3. Low match impact awareness

Rather than expecting perfection, our goal is to set a baseline for responsible play.

Tailoring the Intervention Journey

Changing player behavior isn’t about finger-pointing—it’s about guiding growth. Most players aren’t “bad” people; they’re simply caught in cycles of poor gaming habits, often driven by stress, impulsivity, or a lack of awareness.

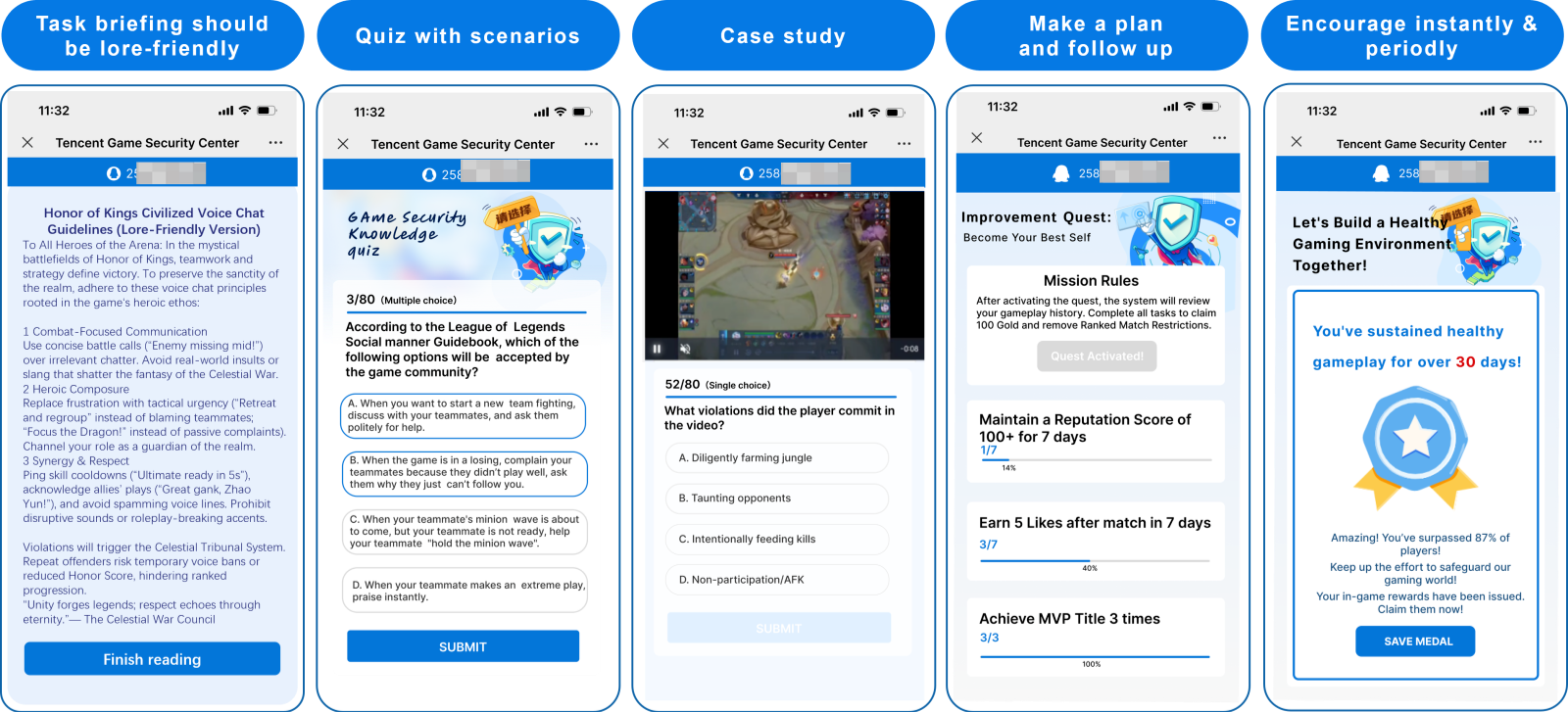

That’s why ACE breaks down interventions into manageable steps:

1. Background: Explain why a player has been flagged, what the process entails, and what they can gain from completing it.

2. Pre-Intervention Insights: Guide players to reflect on their gaming style (e.g., casual, risk-taker, strategist).

3. Behavior Guidelines: Clear definitions of acceptable behavior, reinforced with consequences and incentives.

4. Consequences & Incentives: Clearly warn players about penalties (e.g., chat bans, rank deductions) for violations, and highlight rewards (e.g., exclusive skins) for positive behavior.

5. Player Empowerment: In-game support tools such as chat filters and reporting systems.

6. Re-assessing Cognition: Evaluate the player’s understanding and commitment to change.

7. Player Action Plan: Players articulate improvement strategies and envision positive outcomes.

8. Closing Strategy: Use psychological effects like the “Peak-End Rule” to leave a lasting impression of support and recognition.

These interventions can be delivered via temporary matchmaking restrictions, interactive tutorials, or even comic-style educational content that aligns with the game’s universe.

One of the Player Intervention Journeys ACE Is Currently Running

Another approach is long-term behavior shaping. Some players require personalized intervention. For them, we apply the Stages of Change model: Awareness → Conflict → Preparation → Action → Maintenance. At each stage, tasks are assigned and adjusted based on real-time behavioral data.

To optimize effectiveness, we conduct A/B testing to identify the most impactful intervention combinations, iterating until the best formula is found.
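One common way to compare two intervention variants is a two-proportion z-test on their repeat offense counts. The sketch below uses only the standard library and is a generic statistical example, not a description of ACE's testing pipeline. The function name and the idea of comparing exactly two variants at a time are assumptions.

```python
import math


def two_proportion_z_test(reoffend_a, n_a, reoffend_b, n_b):
    """Two-sided z-test for a difference between two repeat-offense rates.

    Returns (rate_a - rate_b, z statistic, p-value).
    """
    p_a, p_b = reoffend_a / n_a, reoffend_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (reoffend_a + reoffend_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Nobody (or everybody) reoffended in both groups: no evidence either way.
        return p_a - p_b, 0.0, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value


# Made-up counts for illustration: variant A vs. variant B, 1,000 players each.
diff, z, p = two_proportion_z_test(120, 1000, 150, 1000)
print(f"rate difference={diff:+.3f}, z={z:.2f}, p={p:.3f}")
```

A negative difference means variant A had fewer repeat offenders. When many intervention combinations are tested at once, the p-values would also need a multiple-comparison correction before a winner is declared.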

The Metrics That Matter

So how do we design metrics to evaluate the whole system? The key metric is the repeat offense rate: how often players break the rules again after an intervention. We compare this rate with that of other players to see whether there is improvement. If the rate goes down or stays the same, the system is helping and we are reducing the need for long bans.
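To make the metric concrete, here is a minimal sketch of how a repeat offense rate could be computed from violation dates and compared across two groups. The 30-day follow-up window, the data layout (one `(reference_day, violation_days)` pair per player), and the function names are assumptions for illustration. The sketch reports a relative reduction, which is only one possible way to express the comparison.

```python
from datetime import date, timedelta


def reoffended(reference_day, violation_days, follow_up_days=30):
    """True if any violation falls inside the follow-up window."""
    end = reference_day + timedelta(days=follow_up_days)
    return any(reference_day < d <= end for d in violation_days)


def repeat_offense_rate(cohort, follow_up_days=30):
    """cohort: list of (reference_day, violation_days) pairs, one per player."""
    if not cohort:
        return 0.0
    hits = sum(reoffended(ref, days, follow_up_days) for ref, days in cohort)
    return hits / len(cohort)


def relative_reduction(intervened_rate, comparison_rate):
    """Fractional drop versus the comparison group (positive means improvement)."""
    if comparison_rate == 0:
        return 0.0
    return (comparison_rate - intervened_rate) / comparison_rate


# Tiny made-up cohorts; the reference day is the intervention (or matching) date.
ref = date(2024, 5, 1)
intervened = [(ref, [date(2024, 5, 10)]), (ref, []), (ref, []), (ref, [date(2024, 7, 1)])]
comparison = [(ref, [date(2024, 5, 3)]), (ref, [date(2024, 5, 20)]), (ref, []), (ref, [])]

r_int = repeat_offense_rate(intervened)
r_cmp = repeat_offense_rate(comparison)
print(r_int, r_cmp, relative_reduction(r_int, r_cmp))
```

The choice of comparison group matters: players with a similar risk profile who did not receive the intervention give a fairer baseline than the general player population.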

We also track whether these returning players are causing problems again. We monitor signals such as player reports for toxic behavior to make sure the game stays safe for everyone.

Finally, we check whether these players are adding value to the game: are they spending more, staying longer, and playing more positively? Our data shows that a well-deployed intervention system can reduce repeat offenses by 10–15% and cut related player reports by 3–5%.

Conclusion

Gaming communities thrive when players feel supported, respected, and fairly treated. At ACE, we believe that integrating behavioral science with game design not only protects the community but empowers it. By moving beyond punishment and embracing proactive interventions, we’re building safer, more enjoyable spaces for everyone. If you're a developer or game operator, we encourage you to explore intervention models in your own games. The results might surprise you.

"The goal isn’t to punish players, but to reveal better versions of themselves."